We Got Everything You Are Searching For!

SUCCESS STORIES

- Find profitable niches easily

- Discover easy to rank for keywords

Latest Blogs

New From AffiliateBay

We help you keep up with articles featuring in-depth reviews, expert insights, and exclusive tips on the latest affiliate marketing strategies, tools, and trends.

How to Pitch for Guest Blogs?

SEO Friendly Pagination 2024: Best Practices To Be Followed

9 Best Legal Affiliate Programs In 2024 To Earn Passive Money

5+ SEO Tactics To Boost Organic Traffic & Rankings In 2024

What Are Anchor Texts 2024? How To Write A Good Anchor Text?

Guides

Turning your ideas into reality means working on the technical parts. This is when your blog really starts to come together.

Affiliate Programs

Here are lists of the best affiliate programs you can join to monetize your website according to your niche.

Popular Niche Business Tools

Semrush

5/5

Semrush is a comprehensive SEO and marketing tool for research, analytics, and optimization of online strategies.

Jungle Scout

5/5

Jungle Scout is an essential Amazon seller tool for product research, tracking, and data-driven decisions to boost sales.

Bright Data

4.9/5

Bright Data is a versatile web data collection platform for businesses, providing reliable and ethical data harvesting solutions.

Thinkific

5/5

Thinkific is a user-friendly online course creation and management platform empowering educators to monetize their knowledge effectively.

Explore More Categories

Take The 28 Day SEO Challenge Now

Steal Your

SEO STRATEGY

Download my 2x intelligent spreadsheets to steal your competitors' SEO strategy now!

The 7 Day Ecommerce SEO Strategy To Increase Your Search Traffic!

Download my 2x intelligent spreadsheets to steal your competitors' SEO strategy now!

What Our Readers Say About Us...

Hi from the AffiliateBay Team

We’re a team that runs successful affiliate websites, and we’re here to share what we’ve learned: practical tips and advice for affiliate marketing, drawn from our own experience. We also review various products and services, providing unfiltered opinions to help you make informed purchasing decisions.

POPULAR CONTENT

Browse Our Categories

HOSTING

Looking for new web hosting? Save a great deal of money with our special coupon codes and deals on the most popular hosting platforms.

EDUCATION

Learn about the top online courses and test preparation. Explore the best deals and honest reviews of popular education platforms and courses.

SOFTWARE COUPON

Get today’s best deals and offers on popular software, services, and online platforms. Save BIG with our newly updated coupons.

REVIEW

Explore the world of the latest technology with the help of our in-depth guides on trending gadgets and tools.

The Best Of AffiliateBay

VoiPo Review 2024: How is VoiPo Customer Service? Is VoiPo a Good Provider? VoiPo International Calling

Updated on: August 19, 2023

Control D Review 2024: The #1 DNS Software to Take Control of Your Internet

Updated on: July 7, 2023

Speechelo Review 2024: How Powerful Is This AI Text to Speech Software?

Updated on: September 9, 2023

Thrive Quiz Builder Review 2024– Popular Quiz Plugins For WordPress

Updated on: August 12, 2023

Advanced Mobile Care Security Review 2024: Is It Worth?

Updated on: August 26, 2023

{Latest 2024} Best Casino Affiliate Programs To Make MORE Money

Updated on: June 29, 2023

Navigating the B2B Affiliate Marketing Landscape: Tips and Tactics for Growth

Updated on: September 23, 2023

Is Affiliate Marketing Still Worth in 2024? Can You Really Make Money With Affiliate Marketing?

Updated on: January 13, 2024

PropellerAds ALO 2024– What You Need To Know About This Event?

Updated on: August 13, 2023

Pruning Your Website for SEO In 2024– The What, Why, and How?

Updated on: March 15, 2024

DX3 USA 2024: The Best Retail, Marketing & Tech Event

Updated on: August 19, 2023

Bigrock Hosting Pricing 2024 | Does BigRock offer hosting?

Updated on: September 22, 2023

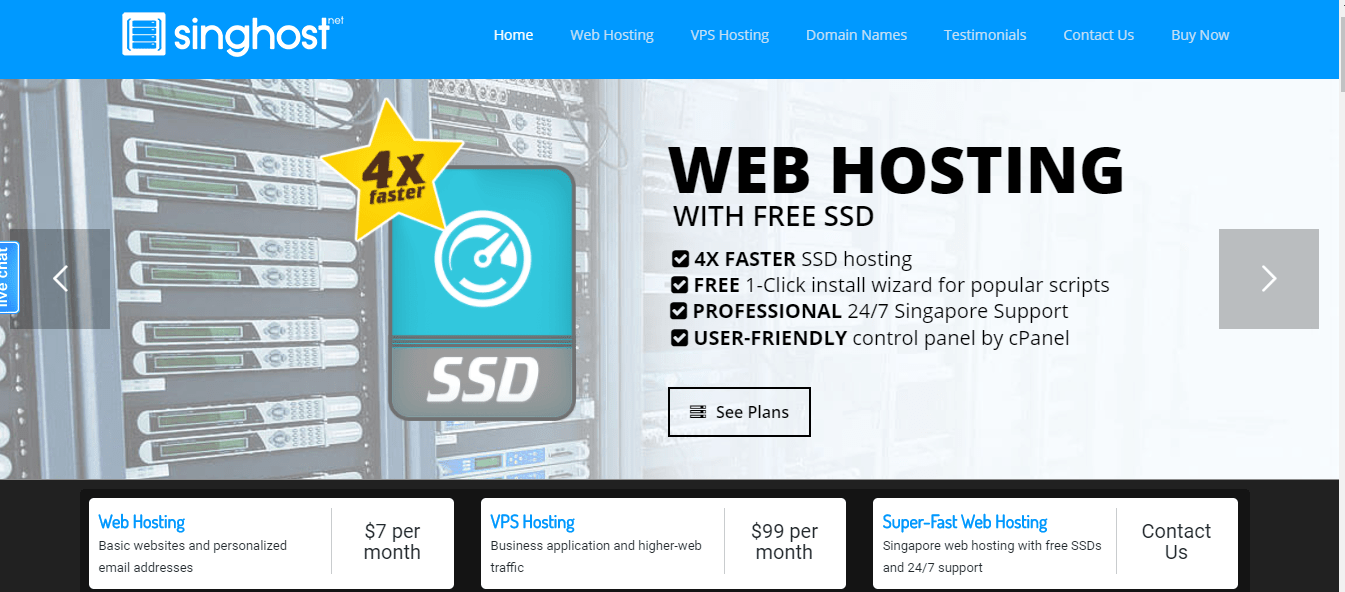

Best Web Hosting Service Providers In Singapore (@ $2.95/mo) 2024

Updated on: September 11, 2023

Inmotion Hosting Pricing | How much does InMotion cost?

Updated on: September 4, 2023

Namesilo Alternatives 2024– Handpicked For Your Business Needs!

Updated on: August 30, 2022

EasySpace Pricing 2024– Choose Perfect Plan For Hosting!

Updated on: August 21, 2023

A2 Hosting Review With Special Coupon Code- 51% OFF 2024

Updated on: September 12, 2023

CrakRevenue Review 2024: #1 Adult CPA Network to Make $$$$

Updated on: August 27, 2023

ROI Collective Review: Premium Affiliate Network in the Financial Verticals?

Updated on: September 24, 2023

10 Best Gambling Ad Networks 2024: Make Money With Gambling!

Updated on: September 2, 2023

Newor Media Review 2024– Is It The Best Alternative To Google AdSense?

Updated on: September 18, 2023

HilltopAds Review 2024: The Best Pop-Under Ad Network?

Updated on: September 1, 2023

19 Best Affiliate Networks For Earning Passive Income 2024

Updated on: September 25, 2023

The Best Lifestyle Blog Post Ideas 2024– Some Interesting Facts About Blogging!

Updated on: August 14, 2023

Top 20 Best Email Marketing Blogs 2024: Your Guide to Email Marketing Success!

Updated on: July 4, 2023

10 Best Anonymous Blogging Platforms 2024: Ultimate Guide

Updated on: August 28, 2022

Top 15 Best Lifestyle Blogs 2024 : Model For Success

Updated on: August 11, 2023

Google AdSense Banner Sizes 2024 : Top 10 AdSense Formats for Maximum Earnings

Updated on: August 3, 2023

How To Start Mom Blog In 2024?- Earn Passive Income Now

Updated on: March 19, 2024

We have been featured on